In Lecture 7 of the Big Data in 30 hours lecture series, we introduce Apache Spark. This memo is meant to serve students as a reference for some of the concepts covered.

About Spark

Spark, managed by the Apache Software Foundation, is an open-source framework for distributed computation. It has become widely popular in recent years. With Spark, one can set up a cluster of machines that run tasks in a coordinated way. Spark builds on the success of its predecessor, Hadoop, and often uses Hadoop's HDFS (Hadoop Distributed File System) as the underlying storage.
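To make "running tasks in a coordinated way" concrete, here is a minimal single-process sketch in Python. It is not Spark code: threads stand in for worker machines, and the names `split_into_partitions`, `count_words` and `distributed_word_count` are hypothetical. It only illustrates the general pattern Spark applies across a cluster: split the data into partitions, run the same task on every partition independently, and let a coordinating driver merge the partial results.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from functools import reduce


def split_into_partitions(items, num_partitions):
    """Deal items round-robin into num_partitions lists.

    Hypothetical helper: a stand-in for data blocks spread across machines.
    """
    partitions = [[] for _ in range(num_partitions)]
    for i, item in enumerate(items):
        partitions[i % num_partitions].append(item)
    return partitions


def count_words(lines):
    """The task each worker runs on its own partition: count words locally."""
    counts = Counter()
    for line in lines:
        counts.update(line.lower().split())
    return counts


def distributed_word_count(lines, num_workers=3):
    """Toy 'cluster' word count: parallel per-partition tasks, then a merge step."""
    partitions = split_into_partitions(lines, num_workers)
    # Each thread plays the role of one worker machine processing one partition.
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        partial_counts = list(pool.map(count_words, partitions))
    # The "driver" combines the partial results into the final answer.
    return reduce(lambda a, b: a + b, partial_counts, Counter())


if __name__ == "__main__":
    text = ["Spark runs tasks", "tasks run in parallel", "Spark uses HDFS"]
    print(distributed_word_count(text))
```

Because each partition is processed without looking at the others, the tasks can run in parallel; only the small partial counts have to travel back to the coordinator.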

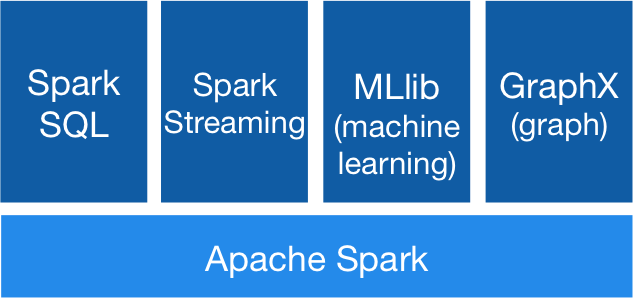

Main components

- Spark SQL – for querying structured data with SQL and DataFrames

- Spark Streaming – for real-time processing

- MLlib – machine learning library

- GraphX – for graph processing

- Spark Core – the underlying engine, providing core abstractions such as RDDs (Resilient Distributed Datasets)

- Cluster managers (schedulers): YARN and Mesos, as well as Spark's built-in standalone manager

- Shells:

  - Python (pyspark)

  - Scala (spark-shell)

  - R (sparkR)
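Spark Core's central abstraction, the RDD, is a partitioned collection on which transformations (such as `map` and `filter`) are evaluated lazily: they only run when an action (such as `collect` or `reduce`) is called. The class below is a hypothetical, single-machine imitation written only to illustrate that behaviour. It is not PySpark's `RDD` class, even though PySpark exposes methods with these names (in real Spark, `parallelize` is a method of `SparkContext`).

```python
from functools import reduce as _reduce


class ToyRDD:
    """Toy imitation of an RDD (hypothetical class, not Spark's API).

    Data is held as a list of partitions. Transformations only record a
    function and return a new ToyRDD; nothing is computed until an action.
    """

    def __init__(self, partitions, ops=()):
        self._partitions = partitions  # list of lists: the "distributed" data
        self._ops = tuple(ops)         # recorded transformations (the lineage)

    @classmethod
    def parallelize(cls, data, num_partitions=2):
        data = list(data)
        size = max(1, -(-len(data) // num_partitions))  # ceiling division
        return cls([data[i:i + size] for i in range(0, len(data), size)])

    # Transformations: lazy, each returns a new ToyRDD and leaves self unchanged.
    def map(self, f):
        return ToyRDD(self._partitions, self._ops + (("map", f),))

    def filter(self, pred):
        return ToyRDD(self._partitions, self._ops + (("filter", pred),))

    # Internal: apply the recorded transformations to one partition.
    def _compute_partition(self, part):
        for kind, fn in self._ops:
            if kind == "map":
                part = [fn(x) for x in part]
            else:
                part = [x for x in part if fn(x)]
        return part

    # Actions: trigger the actual computation, partition by partition.
    def collect(self):
        return [x for part in self._partitions for x in self._compute_partition(part)]

    def reduce(self, f):
        # Reduce each non-empty partition locally, then combine the partials.
        # Raises TypeError if there are no elements at all.
        computed = [self._compute_partition(p) for p in self._partitions]
        partials = [_reduce(f, p) for p in computed if p]
        return _reduce(f, partials)


if __name__ == "__main__":
    rdd = ToyRDD.parallelize(range(1, 11), num_partitions=3)
    evens_squared = rdd.filter(lambda x: x % 2 == 0).map(lambda x: x * x)
    print(evens_squared.collect())
    print(evens_squared.reduce(lambda a, b: a + b))
```

Keeping the transformations as a recorded list rather than computing them immediately mirrors the idea of an RDD's lineage: in Spark, that record is what allows a lost partition to be recomputed.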

Lecture notes: Introduction to Apache Spark