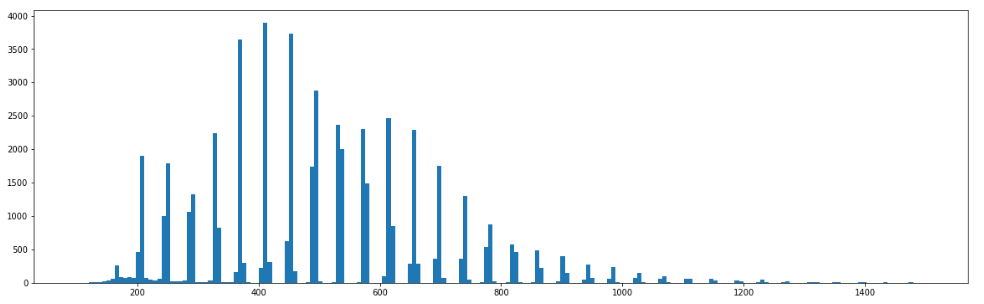

Here is a humorous recent example of what one can achieve with basic data exploration, before reaching for any advanced ML techniques. In this project I was asked to study the response-time log of an online service running

Taking advantage of Machine Learning (ML), without even starting